Webpack will give you great benefits if you're building a complex front-end application with many non-code static assets such as CSS, images, and fonts. It is a static module bundler for modern JavaScript applications. While processing an application, it internally builds a dependency graph from one or more entry points and then combines every module your project needs into one or more bundles of static assets.

To understand why we should use webpack, let's understand how we used JavaScript on the web before bundlers were a thing.

There are two ways to run JavaScript in a browser. First, include a script for each functionality. This solution is hard to scale because loading too many scripts can cause a network bottleneck. The second option is to use a big .js file containing all your project code, but this leads to problems in scope, size, readability and maintainability.

IIFEs (Immediately Invoked Function Expressions) solve scoping issues for large projects. When script files are wrapped in an IIFE, we can safely concatenate them without worrying about scope collisions.
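As a minimal sketch of the idea, the two snippets below stand in for two separate script files that have been concatenated into one. The file names and the `cart` and `wishlist` objects are hypothetical, chosen only to show that each IIFE keeps its own `total` private.

```javascript
// cart.js, wrapped in an IIFE so that `total` stays private to this file.
const cart = (function () {
  let total = 0; // not visible outside the IIFE
  return {
    add(price) {
      total += price;
      return total;
    },
  };
})();

// wishlist.js, concatenated into the same bundle.
// It declares its own `total`, which does not collide with the one above.
const wishlist = (function () {
  let total = 0;
  return {
    add() {
      total += 1;
      return total;
    },
  };
})();

cart.add(20);
wishlist.add();
console.log(cart.add(5), wishlist.add()); // 25 2
```

Without the wrappers, the second `let total` would be a redeclaration error in the concatenated file.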

The use of IIFEs led to tools like Make, Gulp, Grunt, Broccoli or Brunch. These tools are known as task runners, and they concatenate all your project files together.

However, changing one file means we have to rebuild the whole thing. Concatenating makes it easier to reuse scripts across files but makes build optimizations more difficult.

Even if we only use a single function from lodash, we have to add the entire library and then squish it together.

Webpack runs on Node.js, a JavaScript runtime that can be used in computers and servers outside a browser environment.

When Node.js was released, a new era started, and it came with new challenges. Now that JavaScript was no longer running only in a browser, how were Node applications supposed to load new chunks of code? There are no HTML files or script tags to add them to.

CommonJS came out and introduced require, which allows you to load and use a module in the current file. This solved scope issues out of the box by importing each module as it was needed.

JavaScript is taking over the world as a language, as a platform and as a way to rapidly develop and create fast applications.

But there is no browser support for CommonJS. There are no live bindings. There are problems with circular references. Synchronous module resolution and loading is slow. While CommonJS was a great solution for Node.js projects, browsers didn't support modules, so bundlers and tools like Browserify, RequireJS and SystemJS were created, allowing us to write CommonJS modules that run in a browser.

What exactly is Module Federation?

Module Federation gives us a new method of sharing code between applications. To understand it, we need to be able to compare it with the mechanisms we have had access to thus far. To do that, we need to envision a fairly common scenario. You have an enterprise system where you have two applications; the first is an internally facing content management system (CMS) that allows employees to format product information for display on the website. The second application is the externally facing website that displays that product information to the customer. The internal CMS system has a “preview” functionality that ideally matches exactly what the customer would see on the website. These two applications are managed by two separate teams. The ask from management is that both of these applications share the same rendering code so that the preview functionality in the CMS application is always in sync with the rendering code on the customer website.

With this in mind, let's look at the options available to us today for sharing that rendering code.

What are Node Modules?

The most common route to sharing code in the JavaScript ecosystem is via an NPM (Node Package Manager) module. In this model, either the CMS system or the external site would extract the rendering code into a new project (and potentially a new repository). Both of the applications would then remove any rendering code they have and replace it with the components in the NPM module.

The simple illustration below shows the connection between these two applications and the NPM module that holds the rendering components.

The advantage of this approach is that it’s highly controlled and versioned. To update the rendering components, the code needs to be modified, approved, and published to NPM. Both applications then need to bump their dependency versions and their own versions, then re-test and re-deploy.

Another advantage of this approach is that both of the applications that consume this library are in turn versioned and deployed as complete units, probably as a docker image. It’s not possible that the rendering components would change without a version bump and a re-deploy.

The primary disadvantage here is that it’s a slow process. Without automation, it’s up to both teams to inform each other that a new version is out and request an update. It’s also a big cognitive shift for the engineers on the project when they need to make a change to the rendering components. Oftentimes, the engineer will need to change both the host application and the rendering components in order to close a ticket.

Depending on the size of the host application and the rendering components, it can be frustrating to wait on builds, tests, and deployments back-to-back, even when the host application only needed to bump a version. During urgent production failures, hotfixes and mitigation can be challenging, which could impact revenue.

Another approach that has emerged in the last few years is to use a Micro-frontend approach.

Why is Micro Frontend so promising and futuristic?

In the Micro-frontend (Micro-FE) model, the rendering code is again extracted into a new project, but the code is consumed either on the client or on the server at runtime. This is illustrated below as a dotted line.

To get this going, the Rendering Micro-FE project packages its source code as a runtime package. The popular Micro-FE framework SingleSPA calls these packages “parcels”. That code is then deployed to a static hosting service like Amazon’s S3. The CMS application and the external site then download this code at runtime and use it to render the content.

The advantage of this approach is that the Rendering Micro-FE code can be updated without either of the consuming applications redeploying. In addition, both applications are always in sync in terms of how they render the code since they are always downloading the latest version.

The downside is that, just like in the NPM model, the rendering code needs to be extracted from the original application in order for this to work. It also needs to be packaged in a way that works with the Micro-FE framework, which might require substantial modification. In the end, it might not end up looking like a run-of-the-mill React component.

Another downside is that an update to the rendering code might end up breaking either of these applications. Therefore, additional monitoring will need to be added to make this work.

Performance and code duplication are challenging to manage, as either consumer may already depend on a package that the Micro-FE also uses. This can drastically degrade load and execution times, as there is a lot more code that needs to be processed over and over. As each application has a start-up overhead, the boot time can put pressure on a machine's ability to achieve the first meaningful paint (an important metric of page speed).

One workaround to alleviate code duplication is to externalize commonly used packages and have them loaded independently by the consuming application. However, this can become a very manual process and can be risky, as there is a highly centralized reliance on that common code always being there. This can defeat the purpose of Micro-FE's principle of decentralization. Upgrading to newer released copies is challenging, as all Micro-FEs need to be compatible and deployed at the exact same time.

Module Federation presents us with a new option that makes it far more appealing than either of these two approaches.

Why Module Federation is the key to success.

With Module Federation, either the CMS application or the website simply “exposes” its rendering code using the Webpack 5 ModuleFederationPlugin. The other application then consumes that code at runtime, again using the plugin. This is shown in the illustration below:

In this new architecture, the CMS application specifies the external website as a “remote”. It then imports the components just as it would any other React component and invokes them just as it would if it had the code internally. However, it does this at runtime so that when the external website deploys a new version, the CMS application consumes that new code on the next refresh. Distributed code is deployed independently without any required coordination with other codebases that consume it. The consuming code doesn’t have to change to use the newly deployed distributed code.
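A rough sketch of the two plugin configurations might look like the following. The option names (`name`, `filename`, `exposes`, `remotes`, `shared`) are those of the ModuleFederationPlugin, but every value here is an assumption made for illustration: the application names, the `ProductView` component path, and the URL are all hypothetical.

```javascript
// Options for the external website, which exposes its rendering component.
const websiteFederation = {
  name: 'website', // name other builds use to refer to this application
  filename: 'remoteEntry.js', // entry file that consumers download at runtime
  exposes: {
    // public module name -> local source file (hypothetical path)
    './ProductView': './src/components/ProductView',
  },
  // Share one copy of React between host and remote instead of bundling it twice.
  shared: { react: { singleton: true }, 'react-dom': { singleton: true } },
};

// Options for the CMS, which consumes the website's exposed modules.
const cmsFederation = {
  name: 'cms',
  remotes: {
    // "<remote name>@<URL of its remoteEntry.js>"; the URL is a placeholder
    website: 'website@https://website.example.com/remoteEntry.js',
  },
  shared: { react: { singleton: true }, 'react-dom': { singleton: true } },
};

// In each application's webpack.config.js the options would be wired in as:
//   const { ModuleFederationPlugin } = require('webpack').container;
//   module.exports = { plugins: [new ModuleFederationPlugin(websiteFederation)] };
```

With a setup along these lines, the CMS could load the component with an ordinary dynamic import such as `import('website/ProductView')`, which webpack resolves against the remote at runtime.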

There are several advantages to this approach.

- Code remains in-place - For one of the applications, the rendering code remains in-place and is not modified.

- No framework - As long as both applications are using the same view framework, then they can both use the same code.

- No code loaders - Micro-FE frameworks are often coupled with code loaders, like SystemJS, that work in parallel with the babel and Webpack imports that engineers are used to. Importing a federated module works just like any normal import. It just happens to be remote. Unlike other approaches, Module Federation does not require any alterations to existing codebases. There isn’t the learning curve that would come with a Micro-FE framework.

- Applies to any JavaScript - Where Micro-FE frameworks work on UI components, Module Federation can be used for any type of JavaScript; UI components, business logic, i18n strings, etc. Any JavaScript can be shared.

- Applies beyond JavaScript - While many frameworks focus heavily on the JavaScript aspects, Module Federation will work with files that Webpack is currently able to process today such as images, JSON, and CSS. If we can require it, it can be federated.

- Universal - Module Federation can be used on any platform that uses the JavaScript runtime such as Browser, Node, Electron, or Web Worker. It also does not require a specific module type. Many frameworks require use of SystemJS or UMD. Module Federation will work with any type currently available, including AMD, UMD, CommonJS, SystemJS, window variable and so on.

Just as with any architecture, there are some disadvantages and the primary among those is the run-time nature of the linkage. Just as in the Micro-FE example, the application is not complete without the rendering code, which is loaded at runtime. If there are issues with loading that code, then that can result in outages. In addition, bugs in the shared code easily result in either or both of the consuming applications being taken down.
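One common way to soften this risk is to guard the runtime load and fall back to something local when it fails. The helper below is a generic sketch, not part of Module Federation itself; `loadWithFallback` and the simulated failing loader are hypothetical names used only for illustration.

```javascript
// Resolve with the remote module, or with a local fallback if loading fails.
// `loadRemote` is any function returning a promise, for example a dynamic
// import of a federated module such as () => import('website/ProductView')
// (a hypothetical remote name).
async function loadWithFallback(loadRemote, fallback) {
  try {
    return await loadRemote();
  } catch (error) {
    console.error('Remote module failed to load:', error.message);
    return fallback;
  }
}

// Simulated outage: the loader rejects, so the fallback is used instead.
const failingLoader = () => Promise.reject(new Error('remoteEntry.js unreachable'));

loadWithFallback(failingLoader, { render: () => 'Preview unavailable' })
  .then((mod) => console.log(mod.render())); // Preview unavailable
```

This only covers load failures. A bug inside successfully loaded shared code still reaches every consumer, which is why the monitoring mentioned earlier remains relevant.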

What is the relation between Module Federation and Micro-Frontend framework?

Module Federation and Micro-Frontend frameworks cover a lot of the same ground and are complementary. The primary purpose of Module Federation is to share code between applications. The primary purpose of Micro-FE frameworks is to share UI between applications. A subtle, but important, difference.

A Micro-FE framework has two jobs: first, load the UI code onto the page, and second, integrate it in a way that’s platform agnostic. This means that a Micro-FE framework should be able to put React UI components on a Vue page, or Svelte components in a React page. That’s a nifty trick, and frameworks like SingleSPA and OpenComponents do a great job at that.

Module Federation, on the other hand, has one job: get JavaScript from one application into another. This means that it can be used to handle the code loading for a Micro-FE framework. In fact, the SingleSPA documentation has instructions on how to do just that. What Module Federation can do that a Micro-FE framework cannot is load non-UI code onto the page, for example, business logic or state management code. A simple way to think about Module Federation is this: it makes multiple independent applications work together to look and feel like a monolith, at runtime.

Before Module Federation, Micro-FE frameworks would use custom loaders or excellent off-the-shelf loaders, like SystemJS. Now that we have Module Federation, the Micro-FE frameworks are starting to adopt it instead of these other loaders.

Whether or not to use a Micro-FE framework comes down to one question: do you want to use different view frameworks (e.g., React, Vue, Svelte, vanilla JS, etc.) on the same page together? If so, then you’ll want to use a Micro-FE framework and use Module Federation underneath that to get the code onto the page. If you are using the same view framework in all your applications, then you should be able to get by using Module Federation as your “Micro-FE framework” because you don’t need any additional code to, for example, use external React components in your current React application. SingleSPA also offers the ability to route between Micro-FEs or mount different frameworks on a single page via an opinionated and organized convention. You would need to use one of the many great routing solutions (e.g., react-router) to get the same features in a Module Federation system.

Why Monorepo != Monolith

A monorepo is a single repository containing multiple distinct projects, with well-defined relationships.

Consider a repository with several projects in it. We definitely have “code colocation”, but if there are no well-defined relationships among them, we would not call it a monorepo.

Likewise, if a repository contains a massive application without division and encapsulation of discrete parts, it's just a big repo. You can give it a fancy name like "garganturepo," but it's not a monorepo.

In fact, such a repo is prohibitively monolithic, which is often the first thing that comes to mind when people think of monorepos. A good monorepo is the opposite of monolithic.

Monorepo

The opposite of monorepo is "polyrepo". A polyrepo is the current standard way of developing applications: a repo for each team, application, or project. It's common for each repo to have a single build artifact and a simple build pipeline.

Polyrepo

The industry has moved to the polyrepo way of doing things for one big reason: team autonomy. Teams want to make their own decisions about what libraries they'll use, when they'll deploy their apps or libraries, and who can contribute to or use their code.

However, this autonomy is provided by isolation, and isolation harms collaboration. More specifically, these are common drawbacks to a polyrepo environment:

- Cumbersome code sharing - To share code across repositories, you'd likely create a repository for the shared code.

- Significant code duplication - No one wants to go through the hassle of setting up a shared repo, so teams just write their own implementations of common services and components in each repo.

- Costly cross-repo changes to shared libraries and consumers - Consider a critical bug or breaking change in a shared library: the developer needs to set up their environment to apply the changes across multiple repositories with disconnected revision histories.

- Inconsistent tooling - Each project uses its own set of commands for running tests, building, serving, linting, deploying, and so forth.

Monorepo helps to solve all of the problems faced by Polyrepo:

- No overhead to create new projects - Use the existing CI setup, and no need to publish versioned packages if all consumers are in the same repo.

- Atomic commits across projects - Everything works together at every commit. There's no such thing as a breaking change when you fix everything in the same commit.

- One version of everything - No need to worry about incompatibilities because of projects depending on conflicting versions of third party libraries.

- Developer mobility - Get a consistent way of building and testing applications written using different tools and technologies. Developers can confidently contribute to other teams’ applications and verify that their changes are safe.

Why is the world in favor of monorepo tools like Nx?

Monorepo is more than code & tools. A monorepo changes your organization and the way you think about code. By adding consistency, lowering the friction in creating new projects and performing large scale refactoring, by facilitating code sharing and cross-team collaboration, it'll allow your organization to work more efficiently.

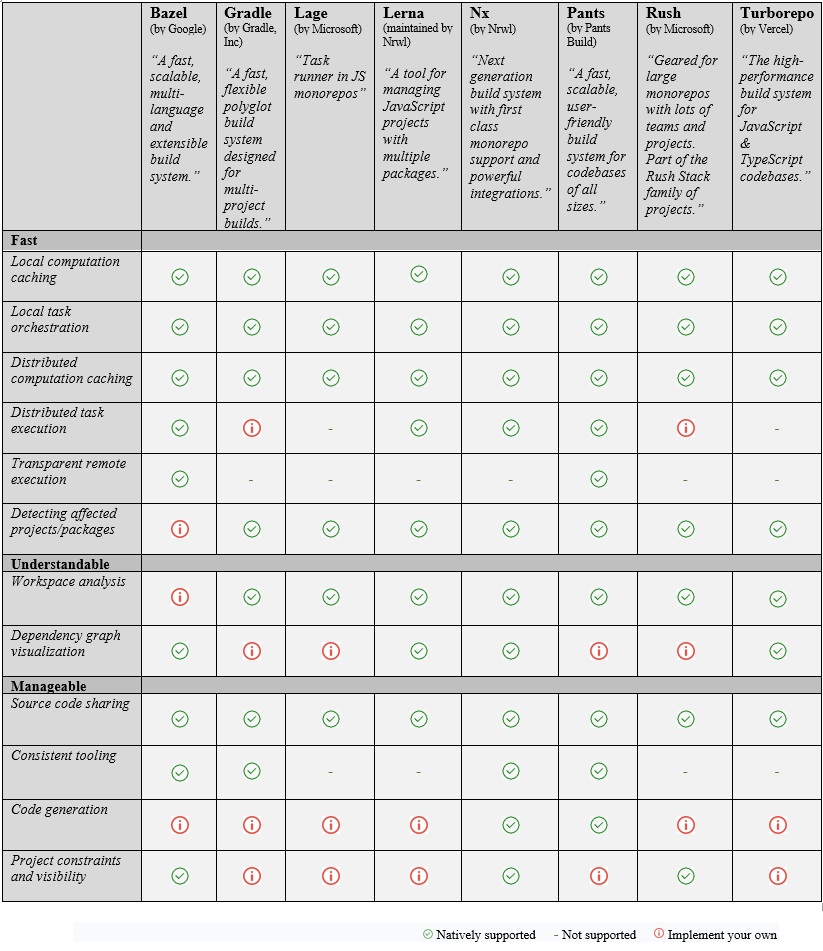

Monorepos have a lot of advantages, but to make them work you need to have the right tools. As your workspace grows, the tools have to help you keep it fast, understandable and manageable.

Each tool fits a specific set of needs and gives you a precise set of features.

Depending on your needs and constraints, we'll help you decide which tool best suits you.

Author

Alin Bhattacharyya

Alin Bhattacharyya is a certified Enterprise Architect, heading the Omnichannel Practice and working as "Associate Vice President" in Coforge. He has over 20 years of experience in Software Engineering, Web and Mobile Application Development, Product Development, Architecture Design, Media Analysis, AI/ML and Technology Management. His vast experience in Designing Solutions, creating Accelerators using Artificial Intelligence, Client Interactions, Onsite-Offshore Model Management, Research & Development and developing POCs in new technologies allows him to have a well-rounded perspective of the industry.
