In today’s digital-first banking ecosystem, timely communication with customers is key. One of the most crucial aspects of this communication is the delivery of real-time financial alerts. Whether it's a debit from an account, a credit transaction, or more complex financial activities, banks must ensure customers are notified instantly and accurately.

Customers can choose the alerts they wish to receive, such as low-balance warnings or high-value deposits, and select their preferred channels, like SMS, email, or push notifications. Traditionally, banks have relied on third-party platforms such as Finacle Alerts or built in-house solutions using mainframes or rule engines. However, these approaches trade off flexibility, latency, and cost, mainly because they depend heavily on database lookups to fetch customer preferences and demographic data.

Apache Kafka emerges as a highly effective solution for overcoming these limitations and embracing a more agile, real-time, event-driven architecture.

Why Apache Kafka?

Apache Kafka is a distributed event streaming platform renowned for its low-latency data processing and high scalability. With Kafka Streams, a client library for building real-time applications and microservices, developers can create sophisticated alerting systems that are fault-tolerant, scalable, and easy to maintain.

Key Benefits of Kafka Streams

- Lightweight and embeddable in standard Java applications

- No external dependencies beyond Kafka itself

- Fault-tolerant local state support for fast, stateful operations

- Exactly-once processing guarantees, even in case of failures (available when the processing.guarantee setting is switched from its at-least-once default)

- Millisecond-level processing latency with event-time-based windowing

- A robust set of stream processing primitives, including high-level DSL and low-level Processor APIs
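As a rough illustration of how little setup these benefits require, the sketch below builds the properties a Kafka Streams application might be started with. The property keys are standard Kafka Streams settings (the `exactly_once_v2` value assumes Kafka Streams 3.0 or later); the class name, the `financial-alerts` application ID, and the standby count are assumptions made for this example.

```java
import java.util.Properties;

// Hypothetical helper: assembles settings for an alerting Streams application.
final class AlertStreamsConfig {

    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        // Identifies the application; also used as the consumer group ID
        // and as a prefix for internal topics. The name is an assumption.
        props.setProperty("application.id", "financial-alerts");
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Opt in to exactly-once processing (the default is at_least_once).
        props.setProperty("processing.guarantee", "exactly_once_v2");
        // Keep one warm copy of each local state store on another instance
        // so that failover does not have to rebuild state from scratch.
        props.setProperty("num.standby.replicas", "1");
        return props;
    }
}
```

The resulting `Properties` object would typically be passed to the `KafkaStreams` constructor together with the processing topology.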

Building a Real-Time Financial Alert System with Kafka Streams

Step 1: Customer Subscription

Customers subscribe to desired alerts and update their contact details using mobile or internet banking. They can personalize thresholds and choose delivery channels. Examples include:

- “Notify me via SMS when my balance exceeds $100.”

- “Send an email if a deposit exceeds $1,000.”

These preferences and demographic details are stored in a backend database.
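One possible shape for such a preference record is sketched below. The article does not define a schema, so every name here (`AlertSubscription`, `Trigger`, `Channel`, and the fields) is hypothetical; the point is only that a subscription combines a trigger type, a customer-chosen threshold, and a delivery channel.

```java
import java.math.BigDecimal;

enum Channel { SMS, EMAIL, PUSH }

enum Trigger { BALANCE_BELOW, BALANCE_ABOVE, DEPOSIT_ABOVE }

// One customer-chosen alert rule (all names are illustrative).
record AlertSubscription(String customerId, Trigger trigger,
                         BigDecimal threshold, Channel channel) {

    // True when the observed amount crosses the customer's threshold
    // in the direction implied by the trigger type.
    boolean isTriggeredBy(BigDecimal observed) {
        int cmp = observed.compareTo(threshold);
        return switch (trigger) {
            case BALANCE_BELOW -> cmp < 0;
            case BALANCE_ABOVE, DEPOSIT_ABOVE -> cmp > 0;
        };
    }
}
```

Under this sketch, "Send an email if a deposit exceeds $1,000" becomes `new AlertSubscription("c-1", Trigger.DEPOSIT_ABOVE, new BigDecimal("1000"), Channel.EMAIL)`.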

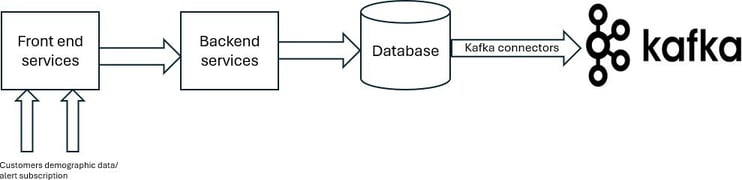

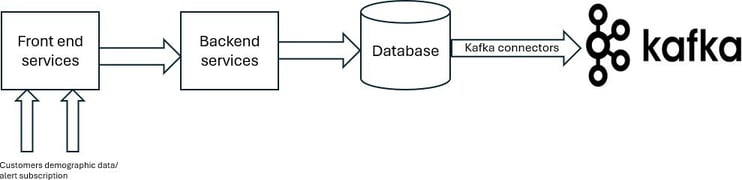

Step 2: Data Ingestion

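Kafka Connect source connectors capture insert and update events from the preferences database and publish them to Kafka topics. A log-based change data capture (CDC) connector such as Debezium reads the database transaction log, while the JDBC source connector polls tables with queries instead. When these topics are keyed (for example, by customer ID) and configured for log compaction, Kafka retains at least the latest record for each key, so downstream processing always works from current preferences.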
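The effect of log compaction on a preferences topic can be pictured with a toy model in plain Java. This is not how Kafka implements compaction internally; it only shows the observable result, assuming records are keyed by customer ID and that a record with a null value marks a deletion. The class and record names are made up for the example.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model of a compacted changelog: replaying it leaves the latest value per key.
final class CompactionSketch {

    record Change(String key, String value) {}

    static Map<String, String> latestPerKey(List<Change> log) {
        Map<String, String> latest = new LinkedHashMap<>();
        for (Change change : log) {
            if (change.value() == null) {
                latest.remove(change.key());   // null value acts as a tombstone (delete)
            } else {
                latest.put(change.key(), change.value());
            }
        }
        return latest;
    }
}
```

A consumer that replays the topic from the beginning ends up with the same view as one that has been following it all along, which is what lets a new application instance rebuild customer preferences without querying the database.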

Step 3: Capturing Financial Events

Financial events include transactions such as card swipes, account debits, or customer activity on mobile apps and web portals (e.g., filling out a loan application). These can be ingested into Kafka via CDC, MQ source connectors, or clickstream trackers.

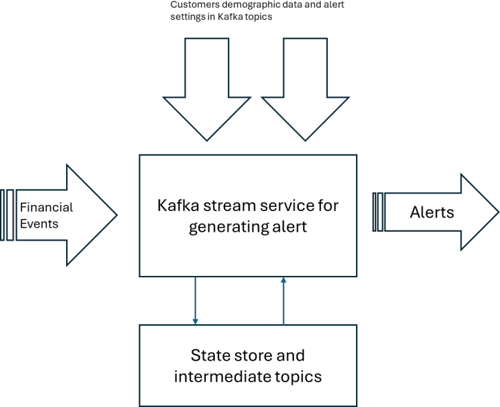

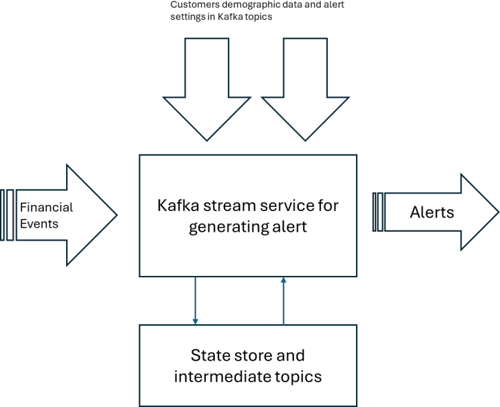

Step 4: Real-Time Alert Processing

Kafka Streams joins incoming financial events with the customer preferences, which are held as KTables. If a condition is met, such as a balance dropping below a set threshold, an alert is generated and routed to the customer’s chosen channel.
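The join logic can be sketched in plain Java, with an ordinary map standing in for the KTable of preferences. In a real topology this would be a KStream-KTable join keyed on customer ID, which requires both topics to share the same key and partitioning. All types and field names below are hypothetical, and the low-balance rule is just one example condition.

```java
import java.math.BigDecimal;
import java.util.Map;
import java.util.Optional;

final class AlertJoinSketch {

    record Preference(BigDecimal lowBalanceThreshold, String channel) {}
    record BalanceEvent(String customerId, BigDecimal balanceAfter) {}
    record Alert(String customerId, String channel, String message) {}

    // 'preferences' stands in for the KTable: the latest preference per customer ID.
    static Optional<Alert> evaluate(BalanceEvent event, Map<String, Preference> preferences) {
        Preference pref = preferences.get(event.customerId());
        if (pref == null) {
            return Optional.empty();   // inner-join behaviour: no preference, no output
        }
        if (event.balanceAfter().compareTo(pref.lowBalanceThreshold()) >= 0) {
            return Optional.empty();   // balance is at or above the threshold
        }
        String message = "Balance " + event.balanceAfter()
                + " is below your threshold of " + pref.lowBalanceThreshold();
        return Optional.of(new Alert(event.customerId(), pref.channel(), message));
    }
}
```

Because the lookup is served from local state rather than a remote database, each event can be evaluated without a network round trip; the resulting alert would then be written to a per-channel output topic or handed to a notification gateway.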

Kafka Streams can also handle stateful computations, such as:

- “Trigger an alert if there are more than five card transactions within the last hour.”

This is achieved using sliding window operations and state stores, which track event counts per key in real time.
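The sketch below models the "more than five card transactions in the last hour" rule in plain Java. A per-card queue of timestamps stands in for the windowed state store; in the Kafka Streams DSL the equivalent would be a grouped stream with a `SlidingWindows` definition and a `count()` aggregation. The class name and constants are assumptions, and the sketch assumes events arrive in timestamp order per card, whereas Kafka Streams handles late records through a configurable grace period.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

final class CardVelocitySketch {

    private static final long WINDOW_MS = 60 * 60 * 1000L;  // one hour
    private static final int MAX_TXNS = 5;

    // Timestamps of recent transactions per card (stands in for a state store).
    private final Map<String, Deque<Long>> recentByCard = new HashMap<>();

    // Records a transaction; returns true if the card now has more than
    // MAX_TXNS transactions within the hour ending at this event.
    boolean record(String cardId, long eventTimeMs) {
        Deque<Long> recent = recentByCard.computeIfAbsent(cardId, id -> new ArrayDeque<>());
        recent.addLast(eventTimeMs);
        // Evict timestamps that have fallen out of the window.
        while (!recent.isEmpty() && recent.peekFirst() <= eventTimeMs - WINDOW_MS) {
            recent.removeFirst();
        }
        return recent.size() > MAX_TXNS;
    }
}
```

Keeping only the timestamps inside the window bounds the state per card, which matters when the same logic runs across millions of cards.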

The Advantages Are Clear

- Flexibility to tailor solutions to unique business cases

- Speed due to stream processing against local state held inside the application (no remote database lookups at runtime)

- Real-time notifications without batch processing

- Scalability to manage millions of events per second

- Fault Tolerance thanks to Kafka’s distributed architecture

- Seamless Integration with analytics platforms and external systems

Unlocking New Possibilities

Beyond alerts, Apache Kafka can power personalized financial experiences:

- Insightful analytics using integrations with Apache Spark

- Cashback or reward offers based on spending behaviors

- Proactive marketing, like offering deals when customers frequently shop with a particular merchant

These capabilities enable banks to move from reactive communications to proactive customer engagement.

Conclusion

In a hyper-competitive financial landscape, the ability to send real-time, personalized, and meaningful notifications is no longer a luxury; it is a necessity. With its robust streaming and event-driven architecture, Apache Kafka offers the flexibility and scalability needed to keep up with evolving customer expectations and regulatory demands.

Coforge brings deep expertise in building scalable, real-time financial systems leveraging Apache Kafka and other modern data platforms. With a strong banking and financial services foundation, Coforge can help you design and implement next-gen alerting systems that drive real-time engagement, reduce operational overhead, and unlock new avenues for customer delight and business growth. From architecture to deployment, we ensure your transformation is seamless, secure, and future-ready.

Need help? Contact our Financial Services experts to learn about real-time financial alerts using Apache Kafka Streams.