Introduction

This document focuses on different data acquisition technologies. Data, often called the new ‘oil’, drives every other aspect of the industry, be it modeling, machine learning, analytics, or visualization. Everything starts from data, and data acquisition is no longer just a technique; it is a technology in its own right.

There are four major methods of data acquisition:

- Transformation

- Sharing

- Extraction

- Collection

Transformation

Data acquisition is not new to the industry. Much of the time, large volumes of legacy data are stored in unstructured form: feeds, logs, text, and image documents. This data is too extensive to ignore, and a transformation process is required to make use of it. Another scenario for transformation is extending time series data for better analysis, where interpolation (estimating missing values between known points) and extrapolation (projecting values beyond the last known point) come in handy.
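As an illustration of the interpolation and extrapolation mentioned above, the sketch below fills gaps in an evenly spaced series with straight lines between known neighbours, then extends the series using the slope of its last two points. It assumes equal spacing between samples and uses plain linear methods; the function names and the sample readings are hypothetical, and libraries such as pandas offer `Series.interpolate` for real workloads.

```python
from typing import List, Optional


def interpolate_gaps(series: List[Optional[float]]) -> List[Optional[float]]:
    """Fill None gaps by linear interpolation between the nearest known neighbours.

    Assumes evenly spaced samples. Leading or trailing gaps have no known
    neighbour on one side, so they are left as None.
    """
    filled = list(series)
    known = [i for i, v in enumerate(series) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (series[right] - series[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = series[left] + step * (i - left)
    return filled


def extrapolate_linear(series: List[float], steps: int) -> List[float]:
    """Project `steps` further points using the slope of the last two points."""
    slope = series[-1] - series[-2]
    return [series[-1] + slope * k for k in range(1, steps + 1)]


# Hypothetical hourly readings with two missing samples.
readings = [10.0, None, None, 16.0, 18.0]
filled = interpolate_gaps(readings)       # [10.0, 12.0, 14.0, 16.0, 18.0]
forecast = extrapolate_linear(filled, 2)  # [20.0, 22.0]
```

Linear extrapolation is only trustworthy a short distance past the data; beyond that, a model fitted to the series' actual trend or seasonality is usually more appropriate.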

Sharing

Shared data is organized data that an organization collects with consent to share it, and to which it provisions controlled access. This kind of data is subject to Data Sharing Agreements and, in the US, the Information Quality Act. A data sharing source needs to be visible, accessible, understandable, and credible, within acceptable thresholds for quality, integrity, and security.
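One way a consumer might check a shared dataset against "acceptable thresholds for quality and integrity" is sketched below: verify the payload against a SHA-256 digest published by the provider, then require a minimum fraction of complete records. The JSON payload format, the required field names, and the `MIN_COMPLETENESS` value are all hypothetical; real thresholds would come from the Data Sharing Agreement.

```python
import hashlib
import json
from typing import Dict, List

# Hypothetical threshold; a real value would be set by the Data Sharing Agreement.
MIN_COMPLETENESS = 0.95


def sha256_hex(payload: bytes) -> str:
    """Hex-encoded SHA-256 digest of the raw payload."""
    return hashlib.sha256(payload).hexdigest()


def completeness(records: List[Dict[str, object]], required: List[str]) -> float:
    """Fraction of records in which every required field is present and not None."""
    if not records:
        return 0.0
    complete = sum(all(r.get(f) is not None for f in required) for r in records)
    return complete / len(records)


def accept_shared_dataset(payload: bytes, published_digest: str,
                          required: List[str]) -> bool:
    """Accept the dataset only if it is intact (integrity) and complete enough (quality)."""
    if sha256_hex(payload) != published_digest:
        return False  # payload was altered or corrupted in transit
    records = json.loads(payload)  # assumes the payload is a JSON array of objects
    return completeness(records, required) >= MIN_COMPLETENESS
```

A digest only proves the bytes match what the provider published; it says nothing about who published them, which is what the authenticity check in the collection process addresses.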

Extraction

The web is the ultimate, though fragmented, source of information. Information is available in the form of web pages, documents, images, and visuals. To use it, a business needs to extract information from these various sources. Web crawlers, text converters, and image classifiers are a few techniques for doing so.
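The core of a web crawler is the extraction step: pulling visible text and outgoing links out of a page so that the links can be queued for further crawling. The minimal sketch below uses Python's standard `html.parser` on a static HTML string; the `PageExtractor` class and the sample page are hypothetical, and fetching pages over the network (and honouring each site's robots.txt) is deliberately left out.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class PageExtractor(HTMLParser):
    """Collects visible text fragments and link targets from an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        self.text: List[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)  # candidate URL for the crawl queue

    def handle_endtag(self, tag: str) -> None:
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data: str) -> None:
        # Keep non-empty text that is not part of a script or stylesheet.
        if not self._skip_depth and data.strip():
            self.text.append(data.strip())


page = ('<html><body><h1>Prices</h1><p>Widget: $5</p>'
        '<a href="/next">Next</a><script>var x = 1;</script></body></html>')
extractor = PageExtractor()
extractor.feed(page)
extractor.close()
# extractor.text  -> ['Prices', 'Widget: $5', 'Next']
# extractor.links -> ['/next']
```

Relative links such as `/next` would normally be resolved against the page URL (for example with `urllib.parse.urljoin`) before being queued.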

Collection

Data collection refers to gathering periodic data from various sources, such as an IoT sensor network, online surveys, or feedback forms. It is also an area where cost-saving mechanisms are needed. For instance, Global Positioning Systems and mobile units are now used to capture field data and enter it directly at the source, and an IoT sensor network, once deployed, can send periodic data directly to the collection site. The challenge remains to collect quality data at the source, where data can be correlated directly with observation and where the strictest controls should be placed.
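Placing controls at the source can be as simple as validating each reading before it is buffered for periodic upload. The sketch below rejects missing and out-of-range values on the device side; the `SourceCollector` class, the `Reading` record, and the temperature range are hypothetical, and the actual transport to the collection site is omitted.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical plausible range for a temperature sensor, in degrees Celsius.
VALID_RANGE: Tuple[float, float] = (-40.0, 85.0)


@dataclass
class Reading:
    sensor_id: str
    value: float
    timestamp: float


@dataclass
class SourceCollector:
    """Validates readings at the source and buffers accepted ones for periodic upload."""
    buffer: List[Reading] = field(default_factory=list)
    rejected: int = 0

    def record(self, sensor_id: str, value: Optional[float],
               timestamp: Optional[float] = None) -> bool:
        low, high = VALID_RANGE
        # `low <= value <= high` is also False for NaN, so NaN readings are rejected.
        if value is None or not (low <= value <= high):
            self.rejected += 1
            return False
        ts = timestamp if timestamp is not None else time.time()
        self.buffer.append(Reading(sensor_id, value, ts))
        return True

    def flush(self) -> List[Reading]:
        """Return the buffered batch for upload and start a fresh buffer."""
        batch, self.buffer = self.buffer, []
        return batch
```

Counting rejections rather than silently dropping them gives the collection site a cheap signal that a sensor may be faulty.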

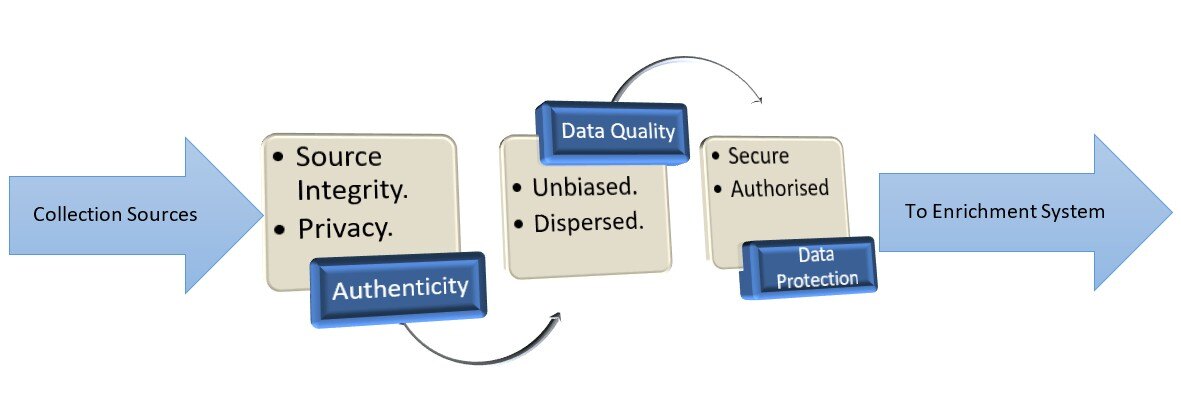

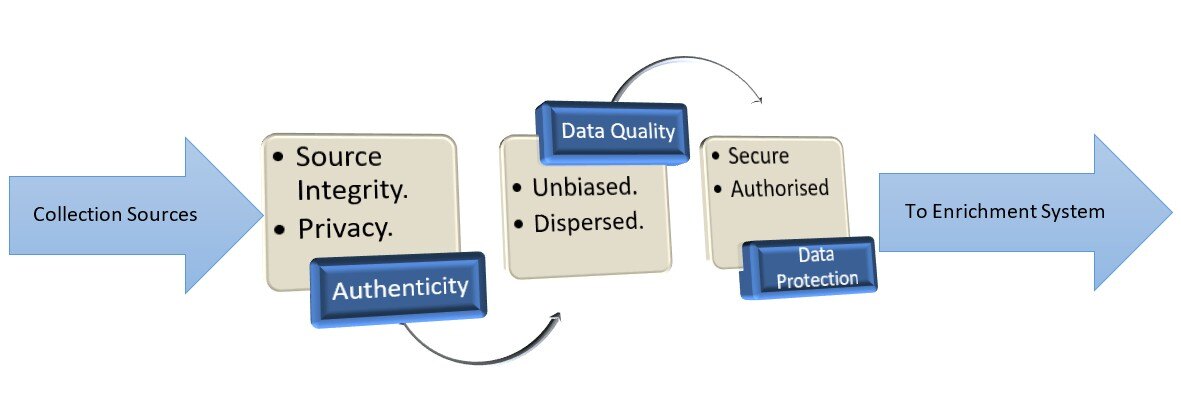

Collection Process

Data acquisition is not just about collecting data; it has its own ghosts: data neutrality, authenticity, protection, and enrichment. Collected data goes through a series of processes before it can be used to feed dependent systems.

Authenticity – Authenticity of the source is of utmost importance, as data from an unverified source can lead to unusual or misleading inferences.
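A common way to verify that data really came from a trusted source is a message authentication code. The sketch below assumes the source and the collector share a secret key (how that key is distributed is out of scope): the source signs each payload with HMAC-SHA256, and the collector recomputes the signature and compares it in constant time. The key and payload are hypothetical.

```python
import hashlib
import hmac


def sign(payload: bytes, key: bytes) -> str:
    """Source side: HMAC-SHA256 signature of the payload, hex-encoded."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_authentic(payload: bytes, signature: str, key: bytes) -> bool:
    """Collector side: recompute the signature and compare in constant time."""
    return hmac.compare_digest(sign(payload, key), signature)


# Hypothetical shared secret and message.
KEY = b"shared-secret"
message = b'{"sensor": "t1", "value": 21.5}'
signature = sign(message, KEY)
# is_authentic(message, signature, KEY)           -> True
# is_authentic(b"tampered", signature, KEY)       -> False
```

`hmac.compare_digest` is used instead of `==` so that comparison time does not leak how much of a forged signature was correct.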

Data Quality – Data should be neutral through all phases, because biased data can lead to biased systems. A well-known example is Amazon’s experimental AI recruiting tool, which was trained on resumes submitted to the company over a decade, most of them from men. In time, the company realized that the system was downgrading resumes from female candidates. On further investigation, the culprit was the AI-based hiring algorithm and, in turn, the training data it had learned from.
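One simple screening check for this kind of bias is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest to the highest; under the "four-fifths rule" used in US employment-selection guidelines, a ratio below 0.8 is treated as a warning sign. The group labels and outcomes are hypothetical, and a low ratio indicates possible adverse impact rather than proving bias.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of candidates selected within each group."""
    total: Counter = Counter()
    selected: Counter = Counter()
    for group, was_selected in outcomes:
        total[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(outcomes: Iterable[Tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical screening results: group A has 5 of 10 selected, group B 2 of 10.
outcomes = [("A", i < 5) for i in range(10)] + [("B", i < 2) for i in range(10)]
ratio = disparate_impact_ratio(outcomes)  # 0.2 / 0.5 = 0.4, well below 0.8
```

Running such a check on both the training data and the model's outputs helps locate where a bias enters the pipeline.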

Data Protection – No one wants their financial statements or medical history published online. Sensitive data should be well protected with authorization, and proper measures should be in place to ensure safe data collection and storage.
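Alongside access control, sensitive fields can be protected before they are stored. The sketch below replaces a name with a keyed hash (a stable pseudonym, so records can still be joined) and masks all but the last four digits of an account number. The field names, the key, and the record are hypothetical; a keyed hash is used instead of a plain hash because plain hashes of names can be reversed by guessing likely inputs.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical key; in practice it would come from a key-management system, not source code.
PSEUDONYM_KEY = b"replace-with-managed-secret"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Stable keyed-hash pseudonym (first 16 hex chars of HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    tail = value[-visible:] if visible > 0 else ""
    return "*" * (len(value) - len(tail)) + tail


def protect_record(record: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the record with hypothetical sensitive fields protected."""
    protected = dict(record)
    if "name" in protected:
        protected["name"] = pseudonymize(protected["name"])
    if "account_number" in protected:
        protected["account_number"] = mask(protected["account_number"])
    return protected


customer = {"name": "Jane Doe", "account_number": "1234567890", "balance": "100"}
stored = protect_record(customer)
# stored["account_number"] -> '******7890'; stored["name"] is a 16-char hex pseudonym
```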