In the age of Artificial Intelligence (AI), where algorithms hold the reins of decision-making, a pressing concern overshadows the promises of a smarter, more equitable future — the spectre of bias. As we usher in an era where machines wield the power to analyse data, draw conclusions, and make predictions, the question of fairness looms large. Behind the seemingly impartial facade of AI systems lie complex webs of historical prejudices, social inequities, and systemic biases, intricately woven into the very fabric of the datasets that fuel these intelligent algorithms.

The journey into the heart of AI bias begins with its roots. Every AI model, from a simple classifier to a deep neural network, learns from data, and that data reflects our world's triumphs, its struggles and, unfortunately, its deeply ingrained biases. As we entrust machines with increasingly critical decisions, from screening job applicants and approving loans to settling insurance claims and informing criminal justice determinations, the ethical imperative to confront and rectify bias becomes paramount.

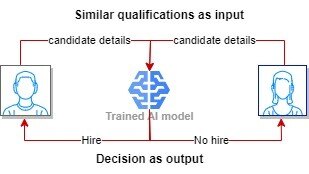

Imagine an AI hiring tool that sorts through resumes, ostensibly designed to identify the best candidates on merit alone. Yet hidden within its complex algorithms lies the potential for discrimination. Unbeknownst to its creators, the tool may favor candidates from certain demographics, echoing the biases inherent in the historical hiring data it learned from. This is far from a hypothetical scenario; it is a stark reality that underscores the urgency of addressing bias in AI.

Beyond hiring, bias manifests itself in many ways. Its consequences are profound and far-reaching, affecting individuals and communities in ways that demand our attention and scrutiny.

As we embark on this exploration of bias in AI, we delve into the nuanced ways in which it manifests. This blog is the first in a three-part series: it explains what bias is, and the following parts cover how to identify it and, finally, how to mitigate it.

Overview

In the ever-evolving realm of AI, the promise of unbiased decision-making has been a beacon of hope. However, the reality is far from utopian. Bias, deeply ingrained in the data we feed into AI models, casts a long shadow over the integrity of their predictions. This blog aims to shed light on the pervasive issue of bias in AI by exploring how it manifests, the harm it causes, and the penalties it can bring.

At the heart of the AI bias dilemma is the data on which these intelligent systems are trained. Our world is brimming with historical biases, prejudices, and societal inequities, all of which find their way into the datasets that fuel AI algorithms. Whether it's gender, race, socioeconomic status, or other facets of human identity, biases present in the data can perpetuate and even exacerbate existing inequalities.

Manifestations of Bias in AI Predictions

- Algorithmic Discrimination: At the forefront of AI bias is algorithmic discrimination, where machine learning models unintentionally perpetuate and sometimes exacerbate existing societal inequalities. Predictive policing algorithms, designed to enhance law enforcement strategies, illustrate algorithmic discrimination in action. These systems often rely on historical crime data that reflects biased policing practices, leading to an overrepresentation of arrests in certain communities. The algorithms learn these patterns and direct more patrols to the same neighbourhoods, where heavier patrolling produces more recorded arrests that feed back into the data. The consequences include increased scrutiny and surveillance in already over-policed areas, sustaining a cycle of discrimination. This example underscores the urgent need to scrutinize training data and refine algorithms so that they do not amplify systemic biases in law enforcement.

- Representation Bias: Representation bias occurs when the training data fails to adequately represent the diversity of real-world scenarios. Imagine a facial recognition system trained predominantly on images of light-skinned individuals; such a model may struggle to recognize faces with darker skin tones, producing more recognition errors for those groups. Representation bias hampers the model's ability to generalize across different demographic groups.

- Stereotyping and Cultural Biases: AI models can inadvertently learn and perpetuate stereotypes present in their training data. For instance, a natural language processing model may associate certain professions with specific genders, such as linking "nurse" with women and "engineer" with men, reflecting societal biases. This can lead to biased predictions that reinforce harmful stereotypes, particularly in language-based applications.

- Sampling Bias: Sampling bias arises when the data used for training is not representative of the entire population. In healthcare, for example, if a diagnostic AI model is trained on data primarily from urban populations, it may not generalize well to rural healthcare scenarios, leading to disparities in diagnostic accuracy between different regions.

- Temporal Bias: Temporal bias emerges when the training data becomes outdated or fails to reflect evolving societal norms. Consider an AI-driven loan approval system trained on historical lending data that reflects past discrimination against certain demographics. As societal attitudes toward creditworthiness evolve, the model may keep applying these outdated patterns, hindering financial inclusion.

- Interaction Bias: Interaction bias occurs when an AI system's interactions with users or real-world entities inadvertently reinforce existing biases. This can be observed in recommendation systems, where users are consistently presented with content that aligns with their existing preferences, creating information bubbles and limiting exposure to diverse perspectives.

- Labelling Bias: Labelling bias stems from inaccuracies or biases in the labelling of training data. In image recognition, for example, if images are labelled based on subjective criteria, the model may learn biased associations. This was evident in a case where an image recognition system misclassified images of people with darker skin tones as "unprofessional," reflecting underlying biases in the training data.
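To make representation and sampling bias more concrete, here is a minimal Python sketch that compares each group's share of a training set with an assumed reference share for the population the model will serve. The function name `representation_gap`, the toy urban/rural records, and the 60/40 reference split are all hypothetical, chosen only to mirror the healthcare example above; this is an illustration, not a complete bias assessment.

```python
from collections import Counter


def representation_gap(train_groups, reference_shares):
    """Return, per group, its share in the training data minus its reference share.

    Hypothetical helper for illustration: positive values mean over-representation,
    negative values mean under-representation relative to the assumed population.
    """
    counts = Counter(train_groups)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical toy data: 100 training records tagged by the region they came from.
train = ["urban"] * 90 + ["rural"] * 10
# Assumed population shares for the region the model will serve.
reference = {"urban": 0.6, "rural": 0.4}

gaps = representation_gap(train, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

On this toy data the sketch prints `urban: +0.30` and `rural: -0.30`, showing that rural patients are heavily under-represented. A gap like this does not by itself prove the model is biased, but it flags the groups for which accuracy should be checked separately.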

Impact of Bias

- Legal and Regulatory Consequences: Organizations deploying biased AI may face legal and regulatory consequences, including fines and penalties. As governments and regulatory bodies increasingly focus on ethical AI, entities found in violation of fairness standards may be subject to legal action.

- Reinforcement of Social Inequities: AI systems trained on biased data can perpetuate and reinforce existing social inequalities. If historical biases are present in the training data, the AI model may inadvertently learn and reproduce these biases, exacerbating disparities in areas such as employment, healthcare, and criminal justice.

- Discrimination and Unfair Treatment: Bias in AI can lead to discriminatory outcomes, affecting individuals or groups based on attributes such as race, gender, or socioeconomic status. This can result in unfair treatment in various contexts, from automated decision-making in hiring processes to predictive policing.

- Underrepresentation and Exclusion: If AI systems are trained on non-representative data, they may struggle to accurately represent or include diverse perspectives. This can lead to underrepresentation or exclusion of certain groups, perpetuating stereotypes and limiting opportunities for individuals who fall outside the normative patterns in the training data.

- Loss of Privacy: Biased AI systems can contribute to privacy concerns, especially in applications like facial recognition. If these systems exhibit demographic or racial biases, individuals may face increased scrutiny or surveillance, impacting their right to privacy and personal autonomy.

- Erosion of Trust: The discovery of bias in AI models can erode public trust in the technology and the organizations deploying it. Users may become skeptical of AI systems, particularly if they perceive these systems as contributing to discrimination or unfair practices.

- Inaccurate Decision-Making: Bias in AI can compromise the accuracy and reliability of decision-making. In scenarios where biased predictions influence critical decisions, such as loan approvals or medical diagnoses, the consequences can be detrimental for individuals relying on these outcomes.

- Inequitable Access to Opportunities: Biased AI can limit access to opportunities for certain individuals or groups. For example, biased hiring algorithms may disadvantage qualified candidates from underrepresented demographics, perpetuating societal disparities in employment.

- Resistance and Backlash: Biased AI systems may face resistance and backlash from users, advocacy groups, and the wider community. This can manifest in protests, public outcry, or calls for increased transparency and accountability in AI development and deployment.

Penalties

Penalties for biased AI can take various forms, depending on the severity of the bias, its impact on individuals or groups, and the legal and regulatory frameworks in place. Here are some potential consequences and penalties:

- Legal Action: In cases where biased AI systems lead to discriminatory outcomes and harm individuals or groups, legal action may be taken. Lawsuits can be filed against the organizations responsible for deploying biased AI, seeking compensation for damages and demanding corrective measures.

- Regulatory Fines: Regulatory bodies, such as data protection agencies or entities specifically overseeing AI practices, may impose fines on organizations that deploy biased AI systems. These fines are meant to serve as deterrents and encourage compliance with established ethical and legal standards.

- Reputational Damage: Instances of bias in AI can lead to significant reputational damage for the organizations involved. Public trust can erode, affecting customer loyalty and stakeholder confidence. Negative publicity may arise, impacting the brand's image and market standing.

- Loss of Business Opportunities: Organizations with biased AI systems may face consequences in terms of lost business opportunities. Clients, partners, or customers may be hesitant to engage with entities known for deploying technology that exhibits bias, potentially leading to a decline in business relationships.

- Corrective Measures Mandates: Regulatory bodies or legal settlements may require organizations to implement specific corrective measures to address bias in their AI systems. This could involve restructuring the AI models, revising training data, or incorporating fairness-enhancing techniques.

- Compliance Audits: Organizations found to have deployed biased AI may be subjected to compliance audits to ensure they adhere to ethical and legal standards. These audits may be conducted by regulatory bodies or independent third parties to assess ongoing compliance efforts.

- Loss of Government Contracts or Partnerships: Entities that supply biased AI systems to government agencies may face the revocation of contracts or partnerships. Governments are increasingly prioritizing ethical considerations in AI deployment, and bias may lead to the termination of agreements.

- Industry Standards and Certification Impact: In sectors with established industry standards or certifications, bias in AI could jeopardize an organization's compliance status. Loss of certification may result in exclusion from industry networks and collaborations.

- Educational and Awareness Requirements: Penalties may also include requirements for organizations to invest in educational initiatives and awareness campaigns focused on responsible AI practices. These aim to prevent future occurrences of bias and to promote a culture of ethical AI development.

It's crucial for organizations to proactively address bias in AI to mitigate these potential penalties, fostering a culture of responsible AI development and ethical considerations.

Understanding the intricate web of biases woven into AI systems is the first step toward developing responsible and equitable technology. As we navigate the complex landscape of algorithmic decision-making, it is imperative to critically examine each type of bias, acknowledging its real-world implications and working tirelessly toward mitigating these biases for a more just and inclusive future.

About Coforge Quasar Responsible AI

Coforge Quasar Responsible AI plays a crucial role in model risk management across various industries. The Responsible AI platform seamlessly integrates with existing IT infrastructure, offering a centralized model inventory for effective governance. Its rigorous model development process ensures thorough documentation and independent validation, enhancing trust and mitigating risks. Coforge Quasar Responsible AI streamlines model implementation, conducts continuous monitoring, and prioritizes ethical considerations, fostering a culture of Responsible AI. Overall, it enables compliance and promotes transparency, reliability, and fairness in AI initiatives across diverse sectors.

To learn more about Quasar Responsible AI, please visit https://www.coforge.com/services/ai or email us at GenAI@coforge.com

About Coforge

Coforge is a global digital services and solutions provider that enables its clients to transform at the intersection of domain expertise and emerging technologies to achieve real-world business impact.

We can help refine your problem statement, crystallize the benefits, and provide concrete solutions to your problems in a collaborative model.

We would love to hear your thoughts and use cases. Please reach out to the Digital Engineering Team to begin a discussion.

Authors

Siddharth Vidhani

Siddharth Vidhani is an Enterprise Architect in Digital Engineering at Coforge Technologies. He has more than 19 years of experience working at Fortune 500 product companies in Insurance, Travel, Finance and Technology. He has strong technical leadership experience in software development, IoT, and cloud architecture (AWS). He is also interested in Artificial Intelligence (Machine Learning, Deep Learning, NLP, Gen AI, XAI).


