Introduction

A decision guide to determine if and when you might need a service mesh

Enterprise organizations are increasingly adopting microservice architectures because smaller, more focused services offer greater agility than monolithic architectures. These flexible implementations, however, come with their own challenges. As the number of microservices grows, so does the complexity of the network communication between them. Governance and security for microservice interactions are often implemented as custom-coded logic, which adds further complexity. On top of that, different development teams use different programming languages to build their microservices and the supporting logic around them. As a result, once these services are deployed to multiple environments, the organization ends up with distributed services that lack centralized, consistent standards.

Common issues with microservice architectures include:

- Service discovery is often decentralized rather than centralized. This decentralized approach can make it challenging to identify and establish consistent service-level definitions and standards across the system.

- Any organization-wide policy update requires changes to every service.

- Governance and security logic adds extra load to the application layer.

- Adding a new feature to the proxy layer requires significant development effort.

- Each service needs the same proxy and security code, rewritten in its own language, to secure communication within the internal application.

What is a Service Mesh?

The service mesh concept was introduced to address the challenges of microservice implementations in which services inside an organization must communicate with one another, abstracting governance concerns away from the microservices themselves. A service mesh is a dedicated infrastructure layer that facilitates service-to-service communication through a proxy, called a sidecar, that runs alongside each service. The mesh controls the delivery of requests to other services, performs load balancing, encrypts data, and discovers other services. A dedicated communication layer also provides many other capabilities that can be removed from the application layer and delegated to a centralized service mesh layer: observability into communications, network resiliency, secure connections, authentication, automated quality of service and backoff for failed requests, telemetry between services, and more. This matters in the cloud native ecosystem, where an application might have hundreds of services, each service might have thousands of instances, and each instance might be constantly changing state as an orchestrator such as Kubernetes dynamically schedules it.
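To make the sidecar idea concrete, here is a minimal Python sketch (not how any particular mesh is implemented) in which the application hands each request to a local proxy, and the proxy picks an upstream instance round-robin and retries failed calls with exponential backoff. `SidecarProxy`, its parameters, and the stand-in `healthy`/`broken` instances are hypothetical names used only for illustration; a real sidecar such as Envoy does this at the network level for every service, whatever language the service is written in.

```python
import itertools
import time
from typing import Callable, List, Optional, Sequence


class SidecarProxy:
    """Hypothetical sidecar: picks an upstream instance round-robin and
    retries failed calls with exponential backoff, so the caller does not have to."""

    def __init__(self, instances: Sequence[Callable[[str], str]],
                 max_attempts: int = 3, base_delay: float = 0.1,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        if not instances:
            raise ValueError("at least one upstream instance is required")
        self._next_instance = itertools.cycle(instances)  # round-robin load balancing
        self.max_attempts = max_attempts
        self.base_delay = base_delay
        self._sleep = sleep  # injectable so the example can record waits instead of sleeping

    def call(self, request: str) -> str:
        last_error: Optional[Exception] = None
        for attempt in range(self.max_attempts):
            instance = next(self._next_instance)
            try:
                return instance(request)
            except ConnectionError as err:
                last_error = err
                if attempt < self.max_attempts - 1:
                    # With the defaults, waits are 0.1 s, then 0.2 s, doubling each retry.
                    self._sleep(self.base_delay * (2 ** attempt))
        raise RuntimeError(f"all {self.max_attempts} attempts failed") from last_error


# Usage: the first instance is down, so the proxy retries on the next one.
def healthy(request: str) -> str:
    return f"ok:{request}"


def broken(request: str) -> str:
    raise ConnectionError("instance down")


waits: List[float] = []
proxy = SidecarProxy([broken, healthy], sleep=waits.append)
print(proxy.call("GET /orders"))  # ok:GET /orders
```

Because the retry and load-balancing policy lives in the proxy, changing it does not require touching the calling service, which is the point of moving this logic out of the application layer.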

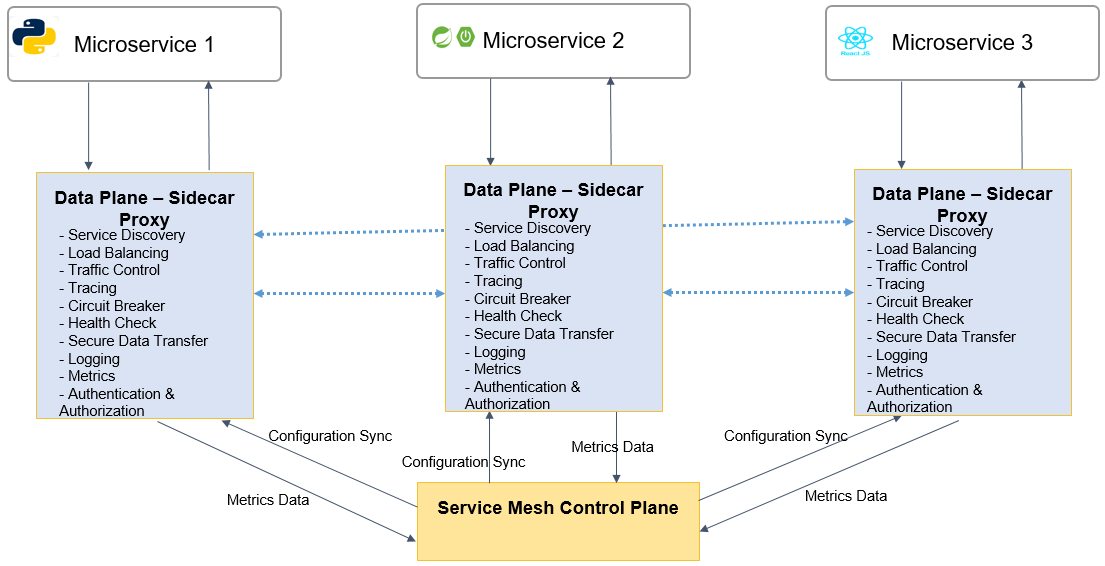

A service mesh architecture consists of network proxies paired with each service in an application, plus a set of management processes. The proxies form the data plane and the management processes form the control plane. The data plane intercepts and processes calls between services. The control plane is the brain of the mesh: it manages configuration metadata, coordinates the behavior of the data plane, and provides APIs that operations and maintenance staff use to configure and observe the entire network. Open source and enterprise service mesh products include Istio, Linkerd, Consul, AWS App Mesh, Kuma, Kong Service Mesh, Traefik Mesh, and Open Service Mesh. Many service meshes use the Envoy proxy in the data plane. The need for a service mesh is closely tied to the rise of cloud native applications and to organizations adopting microservice architectures with an API-first approach.
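The division of labor between the two planes can be sketched in Python under simplifying assumptions: the control plane keeps a central service registry and pushes a versioned configuration snapshot to every attached proxy, and each data-plane proxy resolves services only from the latest snapshot it has received. `ControlPlane`, `DataPlaneProxy`, `MeshConfig`, and the addresses are hypothetical; real meshes such as Istio deliver configuration to Envoy over its xDS APIs rather than through direct method calls.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class MeshConfig:
    version: int
    endpoints: Dict[str, List[str]]  # service name -> instance addresses
    timeout_seconds: float


class DataPlaneProxy:
    """Data plane side: routes using only the configuration pushed to it."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.config: Optional[MeshConfig] = None

    def apply(self, config: MeshConfig) -> None:
        # Ignore stale snapshots so an out-of-order push cannot roll config back.
        if self.config is None or config.version > self.config.version:
            self.config = config

    def resolve(self, service: str) -> List[str]:
        if self.config is None:
            raise RuntimeError(f"{self.name} has no configuration yet")
        return self.config.endpoints.get(service, [])


class ControlPlane:
    """Control plane side: central service registry that pushes snapshots to proxies."""

    def __init__(self, timeout_seconds: float = 2.0) -> None:
        self._endpoints: Dict[str, List[str]] = {}
        self._proxies: List[DataPlaneProxy] = []
        self._version = 0
        self._timeout = timeout_seconds

    def attach(self, proxy: DataPlaneProxy) -> None:
        self._proxies.append(proxy)
        proxy.apply(self._snapshot())  # new proxies start from the current state

    def register(self, service: str, address: str) -> None:
        self._endpoints.setdefault(service, []).append(address)
        self._version += 1
        snapshot = self._snapshot()
        for proxy in self._proxies:
            proxy.apply(snapshot)

    def _snapshot(self) -> MeshConfig:
        # Copy the lists so proxies never share mutable state with the registry.
        endpoints = {name: list(addrs) for name, addrs in self._endpoints.items()}
        return MeshConfig(self._version, endpoints, self._timeout)


# Usage with made-up addresses: the frontend's sidecar learns about "orders" instances.
cp = ControlPlane()
frontend = DataPlaneProxy("frontend-sidecar")
cp.attach(frontend)
cp.register("orders", "10.0.0.5:8080")
cp.register("orders", "10.0.0.6:8080")
print(frontend.resolve("orders"))  # ['10.0.0.5:8080', '10.0.0.6:8080']
```

Keeping discovery and policy in one place and distributing read-only snapshots is what lets the control plane change behavior across the whole mesh without redeploying any service.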

Why Service Mesh?

A service mesh is an optional component that handles east-west (service-to-service) traffic, but it becomes essential in a Kubernetes environment where microservices running in different pods call each other. These pods are typically managed by Kubernetes and protected by an external firewall, but communication inside the cluster is not secured by default, which leaves the whole application vulnerable if an attacker gets inside the cluster.

You could solve this by adding security logic to each pod, but developers would then also have to integrate other logic, such as handling communication between pods and adding a monitoring service to every pod for log analysis and performance fixes, which quickly becomes complex. Instead of writing this logic into every pod, developers should be focusing on business logic; making them do otherwise goes against the vision of microservices.
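As a rough illustration of the authorization step a sidecar can take over from application code, the sketch below applies a default-deny allow-list keyed by caller identity. It assumes identities in the SPIFFE URI form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`, the shape Istio uses for workload identities; in a real mesh the identity would come from the peer's verified mTLS certificate rather than a plain string. The `ALLOW` policy, the `shop` namespace, and the service names are hypothetical.

```python
from typing import Dict, FrozenSet
from urllib.parse import urlparse

# Hypothetical policy: which caller identities may call each destination service.
ALLOW: Dict[str, FrozenSet[str]] = {
    "orders": frozenset({"spiffe://cluster.local/ns/shop/sa/frontend"}),
    "payments": frozenset({"spiffe://cluster.local/ns/shop/sa/orders"}),
}


def is_valid_identity(identity: str, trust_domain: str = "cluster.local") -> bool:
    """Check the assumed spiffe://<trust-domain>/ns/<namespace>/sa/<account> shape."""
    parsed = urlparse(identity)
    parts = parsed.path.strip("/").split("/")
    return (parsed.scheme == "spiffe" and parsed.netloc == trust_domain
            and len(parts) == 4 and parts[0] == "ns" and parts[2] == "sa")


def authorize(peer_identity: str, destination: str) -> bool:
    """Default deny: allow only well-formed identities listed for the destination.

    In a real mesh, peer_identity would be taken from the verified mTLS peer
    certificate; here it is passed in as a string for illustration.
    """
    if not is_valid_identity(peer_identity):
        return False
    return peer_identity in ALLOW.get(destination, frozenset())


print(authorize("spiffe://cluster.local/ns/shop/sa/frontend", "orders"))  # True
```

Because the check runs in the proxy, every service gets the same rule regardless of its language, which is exactly the duplicated per-pod logic described above.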

Where it can be effective

A service mesh can take over this added logic (as shown in the diagram above) and brings the following benefits to the ecosystem through its centralized control plane and distributed data plane architecture.

| Area | Benefits |
|---|---|
| Network Resiliency | Makes microservices more resilient with retries, timeouts, circuit breakers, and load balancing, which help prevent cascading failures. |
| Interoperability | Increases interoperability by providing software-defined networking (SDN) style routing features. |
| Microservice Discovery | Enhances microservice discovery through a centralized control plane architecture. |
| Observability | Improves visibility into the internal state and health of the application, along with transaction and load metrics. |
| Reliability | Improves service reliability by offloading fault-tolerance mechanisms from the application layer, so application development teams can concentrate on business features. |
| Security | Secures inter-microservice communication, supports both authentication and authorization, and provides a secure environment for communication. |
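The circuit breakers listed under Network Resiliency follow a well-known closed/open/half-open pattern. Below is a minimal, single-threaded Python sketch assuming a simple consecutive-failure threshold and a fixed reset timeout; `CircuitBreaker` and its parameters are hypothetical, and production meshes configure this behavior declaratively in the proxy (for example, Envoy's circuit-breaking and outlier-detection settings) rather than in application code.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Minimal closed/open/half-open circuit breaker (not thread-safe)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock  # injectable so the behavior can be tested without waiting
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"  # allow a trial request through
        return "open"

    def call(self, func: Callable[[], str]) -> str:
        if self.state == "open":
            # Fail fast instead of piling more load onto a struggling upstream.
            raise RuntimeError("circuit open: request rejected")
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self.state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # (re)open the circuit
            raise
        self._failures = 0
        self._opened_at = None  # a success closes the circuit
        return result
```

Failing fast while the circuit is open is what stops one unhealthy service from dragging down its callers, the cascading failure the table refers to.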

Where not to use a Service Mesh

A service mesh addresses developer concerns around service-to-service connections; however, it does not help control the complex emergent behaviors that any growing architecture exhibits. How urgently you should consider a service mesh depends on your organization's challenges and goals, and there can be good reasons not to use one:

- You have a monolithic architecture in which application logic is built into one or two containers instead of many, so managing the added logic is not a concern.

- Your microservices are all built on a common framework used throughout your organization.

- The service mesh is not compatible with your application. Before adopting a mesh, make sure it supports your application and will not cause issues.

- You have a diverse technology stack and prefer to keep your options open rather than commit to the features a service mesh offers.

Summary

A service mesh offers many out-of-the-box benefits and features, but it comes with its own set of challenges that you have to take into consideration before adopting one.

You are most likely to need a service mesh if your organization runs Kubernetes with many microservices, written by different teams, that all talk to each other, and you want them to follow a common architectural pattern.

You can most likely skip a service mesh if you operate a monolith, or a collection of monoliths, whose communication patterns change infrequently. You are also less likely to need one if your focus is purely on implementing business logic, since a service mesh addresses operational logic.
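The criteria in this guide can be condensed into a small checklist. The Python sketch below is one hypothetical way to encode them, not a formal decision model: the `Environment` fields and the `assess` function are illustrative names, and each yes/no answer stands in for a judgment call your organization would make.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Environment:
    # Hypothetical yes/no answers mirroring the criteria in this guide.
    runs_kubernetes: bool
    many_interacting_microservices: bool
    built_by_multiple_teams: bool
    wants_common_pattern: bool
    is_monolith: bool
    communication_changes_often: bool
    shared_framework: bool
    mesh_compatible: bool


def assess(env: Environment) -> Tuple[bool, List[str]]:
    """Return (consider_mesh, reasons), checking the reasons to skip a mesh first."""
    against: List[str] = []
    if env.is_monolith and not env.communication_changes_often:
        against.append("monolith whose communication pattern changes infrequently")
    if env.shared_framework:
        against.append("services already share a common framework")
    if not env.mesh_compatible:
        against.append("the candidate mesh does not support the application")
    if against:
        return False, against
    if (env.runs_kubernetes and env.many_interacting_microservices
            and env.built_by_multiple_teams and env.wants_common_pattern):
        return True, ["many interacting microservices on Kubernetes from several teams"]
    return False, ["no strong driver for a service mesh yet"]


shop = Environment(runs_kubernetes=True, many_interacting_microservices=True,
                   built_by_multiple_teams=True, wants_common_pattern=True,
                   is_monolith=False, communication_changes_often=True,
                   shared_framework=False, mesh_compatible=True)
print(assess(shop))
```

Checking the disqualifiers before the drivers reflects the guide's point that a mesh brings its own challenges, so it should be adopted only when nothing rules it out.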

Appendix

Some of the open source and enterprise service mesh providers:

- Istio

- Linkerd

- Envoy

- Cilium Service Mesh

- Consul Connect

- Kuma

- Traefik Mesh

- Meshery

- Gloo Mesh

- Grey Matter

- Open Service Mesh (OSM)

- Nginx Service Mesh (NSM)

- Network Service Mesh

- Apache ServiceComb

- Aspen Mesh

- AWS App Mesh

- OpenShift Service Mesh

- Anthos Service Mesh

About Coforge

Coforge is a global digital services and solutions provider that enables its clients to transform at the intersection of domain expertise and emerging technologies to achieve real-world business impact. A focus on a select set of industries, a detailed understanding of the underlying processes of those industries, and partnerships with leading platforms give us a distinct perspective. Coforge leads with its product engineering approach and leverages Cloud, Data, Integration and Automation technologies to transform client businesses into intelligent, high-growth enterprises. Coforge's proprietary platforms power critical business processes across its core verticals. The firm has a presence in 21 countries with 25 delivery centers across nine countries.