In today's IT industry, teams expect the environment in which an application is tested to be as similar as possible to the final deployment environment, if not identical.

One way to achieve this is to automate the deployment using Docker.

In this blog, we discuss Docker and how it can be used to create containers and deploy Mule instances. We will also see that the same setup can be repeated with no additional effort.

What is Docker?

Docker allows us to package an application with all of its dependencies into a standardised unit for software development. Docker containers are based on open standards, which allows them to run on all major Linux distributions and on Microsoft Windows, across on-premises and cloud infrastructure. A Docker container wraps up a piece of software in a complete file system that contains everything it needs to run: code, runtime, system tools, system libraries, anything that can be installed on a server. This ensures that the software runs the same way regardless of the environment it is deployed to.

How is it different from virtual machines?

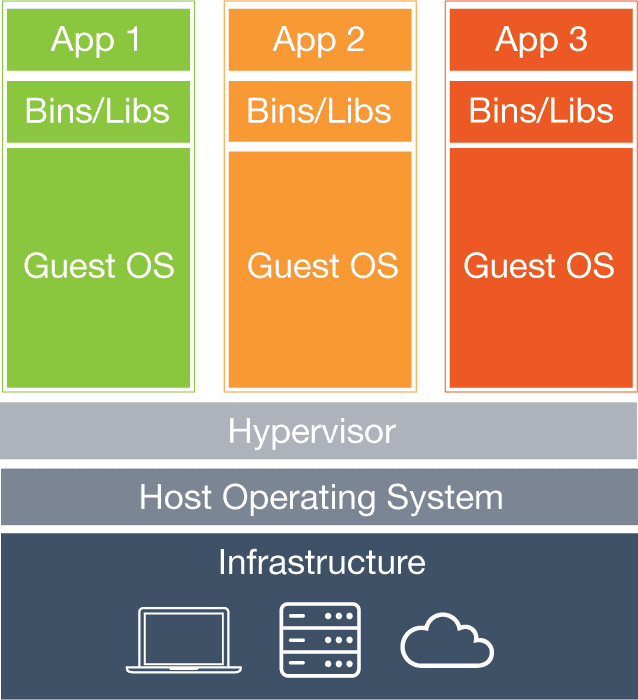

Each virtual machine includes the application, the necessary binaries and libraries, and an entire guest operating system, all of which may be tens of GBs in size. If we need 10 instances of MuleSoft ESB, we would have to replicate the complete setup, including the operating system, for each instance.

Virtual machine diagram:

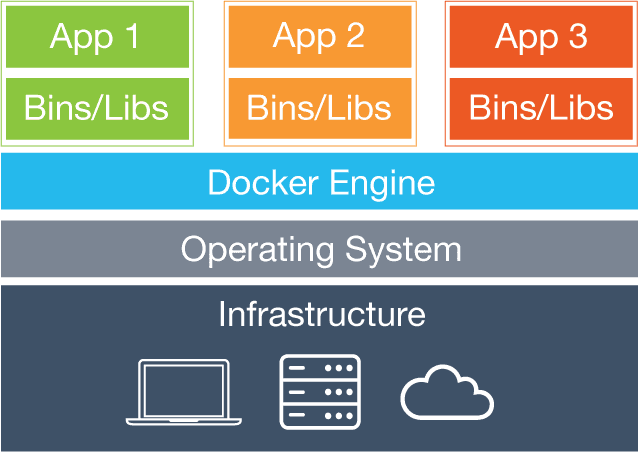

Docker diagram:

Docker containers, on the other hand, include only the application and its dependencies, and share the host operating system's kernel with other containers. Each container runs as an isolated process in user space on the host operating system. Because containers are not tied to a specific infrastructure, the same container can run on any machine with a compatible Docker engine, whether on-premises or in any cloud.

Deploying MuleSoft ESB with Docker

There are many good articles online for getting familiar with Docker, so we continue under the assumption that the reader already has some knowledge of it.

In this blog, we show how to create a MuleSoft ESB instance with Docker. We use the Community Edition of the runtime, which can be replaced with the Enterprise Edition by supplying the appropriate distribution file and updating the Dockerfile accordingly.

First, we need to create a Dockerfile, a script used to build a Docker image. It installs Java, downloads the Mule standalone package, verifies and extracts it, removes the downloaded archive and exposes the ports the runtime uses. The Dockerfile is shown below:

```dockerfile
FROM ubuntu:14.04

LABEL maintainer="govind.mulinti@Coforge.com"

# Refresh the package index, apply updates and install the package
# that provides add-apt-repository
RUN apt-get update && \
    apt-get upgrade -y && \
    apt-get install -y software-properties-common

# Install Java 7: add the PPA, pre-accept the Oracle licence so the
# installer runs non-interactively, then install it.
# The webupd8team PPA has since been discontinued; openjdk-7-jdk from the
# Ubuntu 14.04 archive is an alternative.
RUN add-apt-repository ppa:webupd8team/java -y && \
    apt-get update && \
    echo oracle-java7-installer shared/accepted-oracle-license-v1-1 select true | /usr/bin/debconf-set-selections && \
    apt-get install -y oracle-java7-installer

# Mule runtime (Community Edition) installation:
# This line can reference either a web URL (ADD), or a network share
# or local file (COPY)
ADD https://repository-master.mulesoft.org/nexus/content/repositories/releases/org/mule/distributions/mule-standalone/3.7.0/mule-standalone-3.7.0.tar.gz /opt/

WORKDIR /opt

# Verify the download (md5sum -c expects "<hash>  <file>" with two spaces),
# extract it, link it as /opt/mule and remove the archive
RUN echo "6814d3dffb5d8f308101ebb3f4e3224a  mule-standalone-3.7.0.tar.gz" | md5sum -c && \
    tar -xzvf /opt/mule-standalone-3.7.0.tar.gz && \
    ln -s /opt/mule-standalone-3.7.0 /opt/mule && \
    rm -f mule-standalone-3.7.0.tar.gz

# Configure external access:
# Mule remote debugger
EXPOSE 5000
# Mule JMX port (must match Mule config file)
EXPOSE 1098
# Mule MMC agent port
EXPOSE 7777

# Environment and execution:
ENV MULE_BASE=/opt/mule
WORKDIR /opt/mule-standalone-3.7.0
CMD ["/opt/mule/bin/mule"]
```
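Once the image is built, starting more Mule instances is a matter of running more containers from it, each with its own host ports, instead of replicating a whole VM per instance. The following is a minimal Python sketch. It assumes the image was built with a hypothetical tag `mule-ce:3.7.0` (for example via `docker build -t mule-ce:3.7.0 .`) and uses a port-allocation scheme of our own. It only builds the `docker run` argument lists, which could then be passed to `subprocess.run`.

```python
# Sketch: build `docker run` argument lists for several Mule containers.
# Assumptions (ours, not the post's): the image tag "mule-ce:3.7.0" and the
# host-port scheme (base 10000, a block of 10 ports per instance) are hypothetical.
from typing import Dict, List

# Container ports exposed by the Dockerfile: debugger, JMX, MMC agent
CONTAINER_PORTS: Dict[str, int] = {"debugger": 5000, "jmx": 1098, "mmc_agent": 7777}


def run_command(image: str, index: int, host_port_base: int = 10000) -> List[str]:
    """Return the docker CLI arguments that start Mule instance number `index`.

    Instance 0 publishes host ports 10000-10002, instance 1 uses 10010-10012, etc.
    """
    args = ["docker", "run", "-d", "--name", f"mule-{index}"]
    for offset, container_port in enumerate(CONTAINER_PORTS.values()):
        host_port = host_port_base + index * 10 + offset
        args += ["-p", f"{host_port}:{container_port}"]  # -p HOST:CONTAINER
    args.append(image)
    return args


def run_commands(image: str, count: int) -> List[List[str]]:
    """Commands for `count` identical instances of the same image."""
    return [run_command(image, i) for i in range(count)]


if __name__ == "__main__":
    for cmd in run_commands("mule-ce:3.7.0", 3):
        print(" ".join(cmd))
```

Publishing the JMX port under a different host port may not be enough on its own. JMX over RMI also advertises a server port and host name, and that configuration is outside this sketch.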

A Docker image is essentially a multi-layer file system. When a container starts, these layers are combined by a union file system into one cohesive file system, with a thin writable layer on top. Almost every instruction in our Dockerfile creates a layer that is stacked on top of the layer created by the previous instruction.

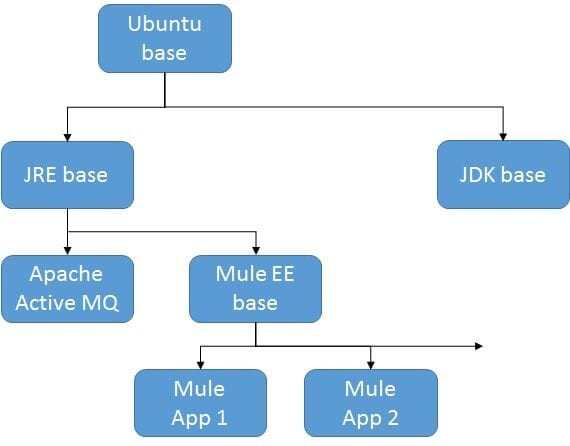

This is not the final stage, though; further improvements can be made. For example, we could create intermediate images (for instance an OS image, a Java image built on it, and a Mule runtime image built on that) to improve reusability, with each Dockerfile referencing its parent image in its FROM instruction. The image below shows a possible hierarchy of Docker images that can be reused for any client, where the OS can vary:
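With such a hierarchy, build order matters: a parent image must exist before any Dockerfile that names it in FROM is built. Below is a minimal Python sketch that works out that order with a topological sort (`graphlib`, Python 3.9+). The image names are hypothetical placeholders, not images from this post.

```python
# Sketch: compute a build order for a hierarchy of Docker images.
# The image names are hypothetical; each child image would name its parent
# in the FROM line of its own Dockerfile.
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Optional

# child image -> parent image (None means it builds FROM a public image)
HIERARCHY: Dict[str, Optional[str]] = {
    "acme/os-base:14.04": None,
    "acme/java7:1.0": "acme/os-base:14.04",
    "acme/mule-ce:3.7.0": "acme/java7:1.0",
    "acme/mule-app-orders:1.0": "acme/mule-ce:3.7.0",
    "acme/mule-app-billing:1.0": "acme/mule-ce:3.7.0",
}


def build_order(hierarchy: Dict[str, Optional[str]]) -> List[str]:
    """Return images ordered so every parent comes before its children.

    Raises graphlib.CycleError if the hierarchy accidentally contains a cycle.
    """
    sorter = TopologicalSorter()
    for image, parent in hierarchy.items():
        if parent is None:
            sorter.add(image)
        else:
            sorter.add(image, parent)  # parent is a predecessor of image
    return list(sorter.static_order())


if __name__ == "__main__":
    for image in build_order(HIERARCHY):
        print(image)
```

Sibling images, such as the two application images here, have no ordering constraint between them and could be built in parallel.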

Here we use the standalone Community Edition because of the licensing restrictions of the Enterprise Edition. If we plan to use the Enterprise Edition, we need to extend the Dockerfile above to use the required packages and apply an appropriate license.

We can go a step further and integrate Docker builds into our continuous integration build system using Maven or Gradle. This way, Docker images are created as part of the build lifecycle, freeing developers to concentrate on creating good Mule applications.
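Plugins such as fabric8's docker-maven-plugin can run the Docker build inside the Maven lifecycle. Whichever plugin is chosen, a common convention is to tag each image with the project version and the commit it was built from; this convention is our assumption, not the post's. Below is a minimal Python sketch of that tagging step that reads the version from a `pom.xml`. The repository name and commit hash used with it are placeholders.

```python
# Sketch: derive a Docker image tag from a Maven project during a CI build.
# Assumptions (ours): the pom.xml uses the standard POM namespace and declares
# its own top-level <version> (not only one inherited from a parent POM).
import xml.etree.ElementTree as ET
from typing import List

POM_NS = {"m": "http://maven.apache.org/POM/4.0.0"}


def project_version(pom_xml: str) -> str:
    """Read the project's top-level <version> from a pom.xml document."""
    root = ET.fromstring(pom_xml)
    version = root.find("m:version", POM_NS)  # direct child of <project> only
    if version is None or not (version.text or "").strip():
        raise ValueError("pom.xml has no top-level <version>")
    return version.text.strip()


def image_tag(repository: str, pom_xml: str, commit: str) -> str:
    """Tag = <repository>:<maven version>-<first 7 chars of the commit hash>."""
    return f"{repository}:{project_version(pom_xml)}-{commit[:7]}"


def build_command(tag: str, context: str = ".") -> List[str]:
    """`docker build` arguments for the Dockerfile in `context`."""
    return ["docker", "build", "-t", tag, context]
```

Because the tag carries both the version and the commit, any image running in test or production can be traced back to the exact source that produced it.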

We regularly need to promote containers between environments, from development to test or from UAT to production. Each environment has specific configuration that must be applied to the application inside the container. Rebuilding the complete image every time we promote the application defeats the purpose of using containers; the solution is to build parametrised Docker images, where the environment-specific values are supplied when the container starts. On the other hand, if containers change often, for example when every code commit spins up a new Mule container, then rebuilding the image with its configuration included may be preferable, since no external configuration files need to be cleaned up once the container is decommissioned.
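A parametrised image is built once and promoted unchanged; only the values supplied at `docker run` time differ per environment. Below is a minimal Python sketch. It assumes, hypothetically, that the Mule application reads settings such as `MULE_ENV` and `DB_HOST` from environment variables; the variable names and host names are illustrative, and how the application consumes them depends on its own configuration.

```python
# Sketch: promote ONE image across environments by varying only -e flags.
# Assumption (hypothetical): the Mule app reads MULE_ENV and DB_HOST from its
# environment; the values below are illustrative placeholders.
from typing import Dict, List

ENVIRONMENTS: Dict[str, Dict[str, str]] = {
    "dev": {"MULE_ENV": "dev", "DB_HOST": "dev-db.internal"},
    "test": {"MULE_ENV": "test", "DB_HOST": "test-db.internal"},
    "uat": {"MULE_ENV": "uat", "DB_HOST": "uat-db.internal"},
    "prod": {"MULE_ENV": "prod", "DB_HOST": "prod-db.internal"},
}


def promote(image: str, environment: str) -> List[str]:
    """Return `docker run` arguments for the same image in a given environment.

    The image reference never changes; only the environment variables do.
    """
    if environment not in ENVIRONMENTS:
        raise KeyError(f"unknown environment: {environment}")
    args = ["docker", "run", "-d", "--name", f"mule-{environment}"]
    for key, value in sorted(ENVIRONMENTS[environment].items()):
        args += ["-e", f"{key}={value}"]  # -e KEY=VALUE sets a container env var
    args.append(image)
    return args
```

For example, `promote("mule-ce:3.7.0", "uat")` and `promote("mule-ce:3.7.0", "prod")` start the very image that was tested, which is the point of promoting containers rather than rebuilding them.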

Benefits

These images can be provided to testing teams, and the applications they test are exactly the ones that will be made available in production.

Depending on the scenario, we can also plan to run Docker images in production. With this approach, services can be provisioned as microservices and made available, and updates can be delivered with negligible outages. Other benefits include:

- It is easy to start a new instance when the need arises and discard it when it is no longer required, so the system can adapt to changes quickly and reduce errors caused by, for instance, peak loads and configuration changes.

- Scale out and increase the number of instances to which applications can be deployed.

- Instead of running a single instance of an application server (MuleSoft ESB in our case) on a computer, we can partition applications across multiple instances, for example for performance: high-priority applications run on a separate instance, while less critical applications run on another.

- Enable quick replacement of instances in the deployment environment.

- Better control over the contents of the different environments.
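The priority-based partitioning described above can be expressed with Docker's standard resource-limit flags `--cpus` and `--memory`. Below is a minimal Python sketch; the tier names, limit values and container names are hypothetical examples.

```python
# Sketch: one Mule container per priority tier, each with its own resource limits.
# --cpus and --memory are standard `docker run` options; the tiers and values
# below are hypothetical.
from typing import Dict, List

TIERS: Dict[str, Dict[str, str]] = {
    "high": {"cpus": "2", "memory": "2g"},
    "low": {"cpus": "0.5", "memory": "1g"},
}


def instance_command(image: str, tier: str) -> List[str]:
    """`docker run` arguments for a Mule instance dedicated to one priority tier."""
    limits = TIERS[tier]  # KeyError for an unknown tier
    return [
        "docker", "run", "-d",
        "--name", f"mule-{tier}-priority",
        "--cpus", limits["cpus"],      # CPU share available to the container
        "--memory", limits["memory"],  # hard memory limit for the container
        image,
    ]
```

High-priority applications would then be deployed only to the `mule-high-priority` container, for example through that instance's `apps` directory. With Java 7 the JVM does not size its heap from the container's memory limit, so the heap configured in Mule's `conf/wrapper.conf` should fit within the `--memory` value.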

If you would like to find out more about how APIs could help you make the most out of your current infrastructure while enabling you to open your digital horizons, do give us a call at +44 (0)203 475 7980 or email us at Salesforce@coforge.com

About Coforge.

We are a global digital services and solutions provider, who leverage emerging technologies and deep domain expertise to deliver real-world business impact for our clients. A focus on very select industries, a detailed understanding of the underlying processes of those industries, and partnerships with leading platforms provide us with a distinct perspective. We lead with our product engineering approach and leverage Cloud, Data, Integration, and Automation technologies to transform client businesses into intelligent, high-growth enterprises. Our proprietary platforms power critical business processes across our core verticals. We are located in 23 countries with 30 delivery centers across nine countries.